Jaguar Land Rover

Driver Situational Awareness

Balancing assistance versus distraction.

As modern-day drivers, we are blessed and cursed with a plethora of advanced driver-assistance features. Although their intentions are noble, too often they are confusing to use, understand, and, most importantly, react to. As Jaguar Land Rover looks ahead to developing its fully autonomous driving system, our team was tasked with breaking down the communication barrier between car and driver. Rather than just pile on to an already convoluted language of icons and beeps, our team studied driving behavior to better integrate automation with a driving task that’s riddled with split-second decisions.

Details

-

Aishwarya Dighe - Lead Product Owner

Ethan Nguyen - Lead Product Designer

Bobbie Seppelt - Lead Researcher

Kristin Foster - Agile Lead

Matthew Mercer - Senior UX Designer

Jules Haas - Senior UX Designer

Sebastian Priss - UX Designer

Peter Arnold - Software Engineer

Tim Hinton - Motion Designer

Severn Durand - Lead Systems Engineer

-

Research was the foundation for all of our decision-making and feature prioritization. We used a range of methods to gather data from every relevant source.

First, we reviewed the existing research data that was available, taking into consideration that some of it may be outdated. Next, our team test drove the most advanced driver-assisted vehicles on the market and documented our experience. By doing so, we were able to get a broader picture of the current driving landscape.

From there, we conducted several types of user tests. These included user surveys based on videos and images, in-car driving sessions with eye-tracking glasses, and a custom driving simulation built to test our hypothesis.

Meanwhile, we coordinated with engineers, safety feature teams, and Nvidia to implement our new designs within the product roadmap.

-

Our research led to a comprehensive platform and inclusive design for the new Jaguar Land Rover Active Driver Assistance System in all our new vehicles.

Prioritized easy access to commonly used features

Better parity between system icons and active driving display

Visuals on the driving display are color-coded but not color-dependent for understanding

Feature groupings based on commute type

Easy-to-understand feature progression

Prioritization of system warnings visually and audibly

Contextual display of objects in the active driving scene

Contextual use of haptics, speakers and screens for different levels of driving autonomy

Higher level of customer appreciation and understanding of features

Our Work in Action

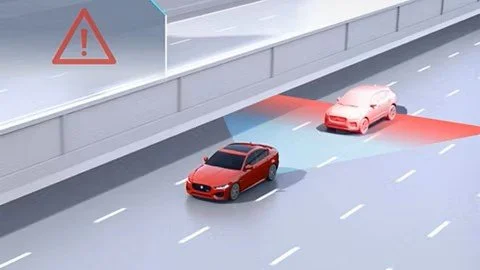

Lateral Driving Assist

Most drivers, understandably, could not distinguish between lane detection, lane departure warning, blind spot warning, lane keep assistance, and lane centering. We proposed a new design for communicating how these features build on top of one another, and for automatically recommending features based on the type of driving environment and commute. For example, we would recommend lane centering only on long highway drives, not on grocery runs.
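To make the recommendation idea concrete, here is a minimal sketch. It is not the production logic: the `Trip` type, the `road_type` values, the 50 km threshold, and the assumption that the five features form a simple ordered ladder are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Lateral features in the order the text lists them. Treating this as a strict
# "each builds on the previous" ladder is an assumption of this sketch.
LATERAL_LADDER = [
    "lane detection",
    "lane departure warning",
    "blind spot warning",
    "lane keep assistance",
    "lane centering",
]


@dataclass
class Trip:
    road_type: str       # hypothetical values: "highway" or "city"
    distance_km: float


def recommend_lateral_features(trip: Trip, long_trip_km: float = 50.0) -> list[str]:
    """Suggest lateral features for a trip (illustrative rule only)."""
    if trip.road_type == "highway" and trip.distance_km >= long_trip_km:
        # Long highway drive: offer the full ladder, including lane centering.
        return list(LATERAL_LADDER)
    # Short or city trips (e.g. a grocery run): stop short of lane centering.
    return LATERAL_LADDER[:-1]
```

With these placeholder values, a 120 km highway trip would be offered lane centering, while a 5 km city errand would not.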

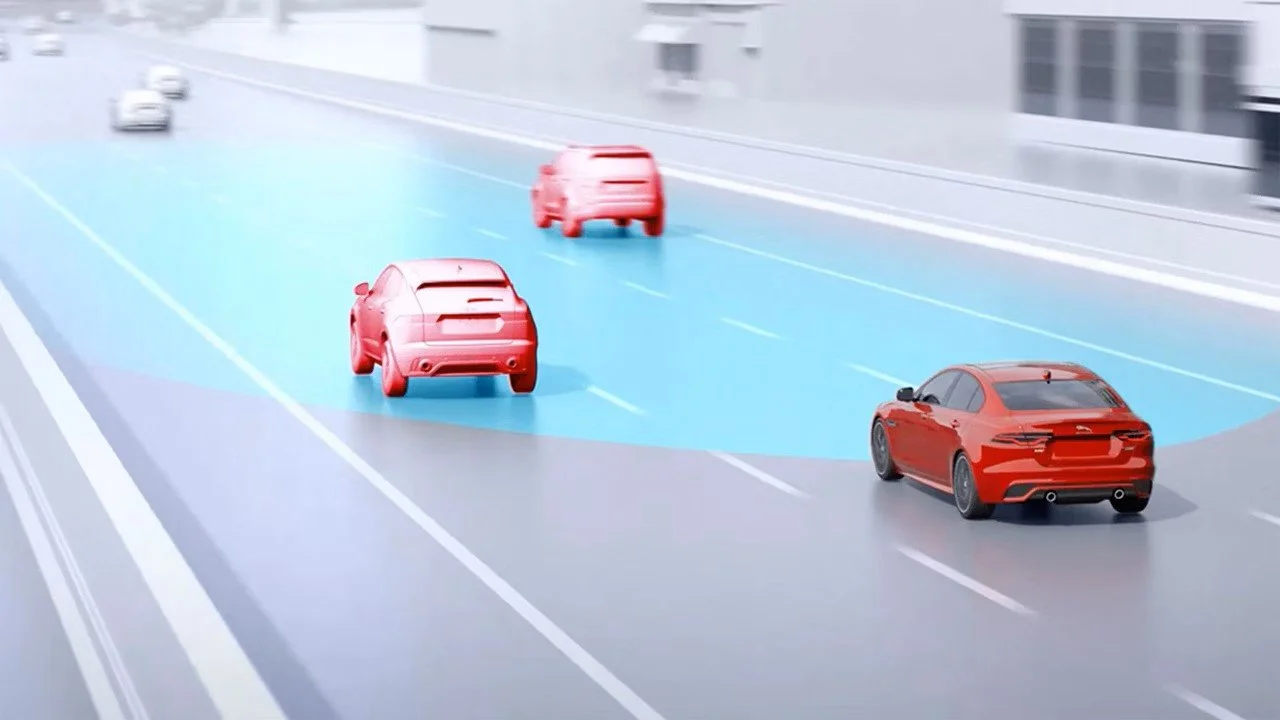

Longitudinal Driving Assist

A feature-rich package of front and rear collision prevention, adaptive cruise control, speed adaptation to road conditions and topography, and fully autonomous driving, longitudinal driving assist can be overwhelming to drivers. Building drivers’ trust in the features, along with an understanding of their limitations, is crucial for their safe and regular use.

We leveraged Nvidia’s predictive AI to limit the objects shown on the display to those most likely to affect the driving situation. We carefully balanced the time the driver’s eyes are on the road versus the display. We also employed a dynamic camera angle in the display based on vehicle speed and driving situation. This allows us to maximize the screen real estate and display objects at a farther distance at faster speeds, while still adapting to fast-approaching threats from the sides and rear.
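The two display ideas in this paragraph can be sketched roughly as follows. This is an illustration under stated assumptions, not the shipped system: the `relevance` score stands in for whatever the prediction model outputs, and every threshold and distance is a placeholder.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    label: str
    relevance: float  # hypothetical 0..1 score from an upstream prediction model


def objects_to_display(objects, max_shown=5, min_relevance=0.5):
    """Keep only the most relevant objects, highest score first."""
    relevant = [o for o in objects if o.relevance >= min_relevance]
    return sorted(relevant, key=lambda o: o.relevance, reverse=True)[:max_shown]


def camera_view_distance_m(speed_kmh, near_m=30.0, far_m=150.0, max_speed_kmh=130.0):
    """Linearly extend the look-ahead distance as vehicle speed increases."""
    t = min(max(speed_kmh / max_speed_kmh, 0.0), 1.0)  # clamp to [0, 1]
    return near_m + t * (far_m - near_m)
```

A real implementation would also need to handle threats approaching from the sides and rear, which a single look-ahead distance does not capture.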

Levels of Autonomous Driving

With the A.I. race reaching across all industries, the focus can sometimes be more on bragging rights and marketability than on user needs and safety. While lawmakers and regulators struggle to keep up with the flood of new technology reaching the market, car manufacturers are left to determine the best approach to deploying different levels of autonomous driving to stay competitive.

Our team was responsible for the crucial task of creating the requirements and thresholds at which the vehicle can offer autonomous control. This required extensive study of driving behaviors in a variety of driving conditions. We also tested language and animations to show transitions in and out of the computer’s driving domain. This included monitoring the driver’s eyes for attention and awareness, and defining the appropriate steps to alert the driver and hand the driving task back.
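As a rough illustration of what an attention-based escalation could look like, consider the sketch below. The step names and time thresholds are hypothetical placeholders, not the values or wording the team tested.

```python
def handover_step(seconds_eyes_off_road: float) -> str:
    """Map continuous eyes-off-road time to an escalation step (illustrative)."""
    if seconds_eyes_off_road < 2.0:
        return "none"
    if seconds_eyes_off_road < 5.0:
        return "visual alert"
    if seconds_eyes_off_road < 8.0:
        return "audio and haptic alert"
    # Beyond the last threshold, ask the driver to take over the driving task.
    return "request takeover"
```

The point of a staged ladder like this is that each step is more intrusive than the last, so an attentive driver rarely sees anything beyond the first alert.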

Related Work

-

Women's Healthcare Associates

WHA recognizes that womanhood means different things to different people, and that each woman’s journey is uniquely her own. They also recognize that nearly 80 percent of people search online for medical and health information. The new mobile-friendly website that we developed for WHA allows users to search for general information, health topics, providers, and offices. Patients are provided contextualized information based on their stage of life, from puberty to planning a family to menopause.

-

American Airlines Cargo

America’s best air transportation company needed to bring its robust desktop application into the mobile space to meet its customers’ needs. From finding international routes to monitoring cargo temperature, we built an app that gives customers deep insight into their shipments right on their Android devices.